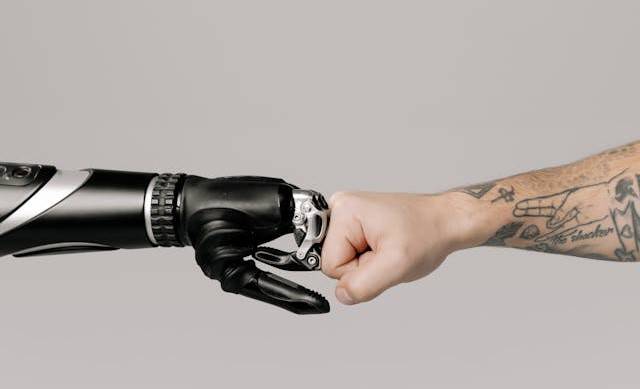

As it becomes harder to distinguish machine-generated content and identities from human-created ones, we enter unexplored territory regarding who or what to trust in the digital age.

On the internet, our digital identities have become as significant as our physical ones. Some of us spend real money acquiring and customizing avatars, the digital representations of ourselves in virtual environments like The Sandbox or Minecraft.

A majority of us don’t think twice about creating online personas through social media profiles, and a growing number of us are engaging in e-commerce and managing our transactions online.

But besides this explosion of digital identities created by real humans behind a screen, another type of digital identity, created by non-human identities (NHI), is also exploding onto the scene.

Bots, one category of NHI, are automated programs designed to mimic human actions and take on digital identity functions. They can create accounts on social media, engage in conversations, and perform tasks that typically require human input.

While bots can streamline tasks like customer service and data entry, their potential for misuse is alarming. They can fabricate identities, disseminate disinformation, manipulate online discourse, and perpetrate fraud, blurring the lines between human and machine interactions.

This has profound implications for trust and authenticity online.

Good bot, bad bot

Bots are prevalent because they have undeniably enhanced our digital experiences. They provide round-the-clock support, facilitate seamless transactions, and connect us with global communities. However, this convenience comes at a cost. The pervasive presence of bots has cast a shadow of doubt over the veracity of online information.

Fraudsters are already taking advantage of AI-generated bots to spread misinformation, run scams, and create fake accounts and content that look and feel completely real, according to Ajay Patel, the head of World ID (read sidebar below for more info).

Prior to joining Tools for Humanity (TFH), Ajay helped build the Google Payments Identity Team, focusing on verifying the digital identities of advertisers and expanding Google’s Know Your Customer (KYC) process.

He told CXposé.tech, “Honestly, it’s no surprise that people are struggling to tell what’s real and what’s fake anymore, no matter how cautious or aware you are, they always have ways to look legitimately true.”

For example, in 2023 scammers were found tracking consumers through social media, pretending to be airline employees, and offering fake refunds to pull off financial fraud.

It would be foolish to implicitly trust online reviews, news articles, or social media trends, because any of them may be generated or influenced by bots. This decline in trust has a ripple effect, impacting everything from consumer choices to political discourse and social interactions.

A 2024 survey by Tools for Humanity (TFH) found that 71% of respondents in Colombia were worried that smarter, more convincing bots would lead to even bigger scams and misinformation problems. Meanwhile, in Mexico, nearly 9 in 10 people said they or someone they knew had been personally affected by online fraud or identity theft.

Societal impact

According to Amazon Web Services, 57% of content on the internet is generated by artificial intelligence. (The term “bot-generated content” is sometimes used more broadly to include AI-generated content.)

By 2025, it’s predicted that 90-99.9% of all online content will be AI-generated. An ongoing case study shows AI content in top Google search results reached 18.50% as of November 2024, continuing an upward trend.

This situation warrants attention: bots are getting smarter, and the content they generate has surpassed content generated by humans. Ajay even opined that as AI evolves, bots are blending in so seamlessly that they impersonate real people and authorities, confusing the online space.

In summary, here are the potential risks of bots:

Raising awareness about the capabilities and potential dangers of bots may help, but it must be complemented by digital literacy programs that equip users with the skills to evaluate online information and identify bot activity.

Technology and advanced algorithms that detect bot-driven manipulation are useful to an extent. However, governments and industry bodies must still do their part to establish clear guidelines and regulations for bot usage (a term used here interchangeably with the usage of AI and automated content). These could cover, for instance, transparency in bot operations, restrictions on activities that put privacy at risk, and accountability for bot operators.

What is next?

The identity crisis is a pressing challenge that demands urgent attention. While technology plays a role in addressing this issue, eliminating bots entirely isn’t the answer.

Trust and authenticity must remain integral to our online interactions and experiences. The key challenge is to create systems that maintain the helpful aspects of bots while mitigating the harmful ones.