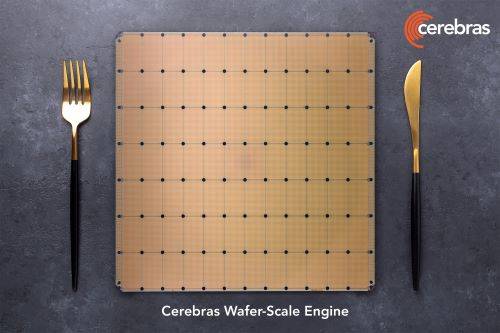

This past week, Cerebras set a new record by bringing to market what it calls the world's fastest AI chip, the Wafer Scale Engine 3, or WSE-3.

We have all heard about the role GPUs have played in accelerating AI innovation, use cases and adoption. But GPUs are not the only hardware platform capable of handling AI workloads.

According to Cerebras CEO and co-founder Andrew Feldman, eight years ago the industry said wafer-scale processors were a pipe dream. Now in its third generation, the company's wafer-scale chip, WSE-3, is the fastest AI chip in the world, “…built for the latest cutting-edge AI work.”

He added, “We are thrilled to bring WSE-3 and CS-3 to market to help solve today’s biggest AI challenges.”

Superior Power Efficiency and Software Simplicity

With every component optimized for AI work, the CS-3 delivers more compute performance in less space and with less power than any other system. While GPU power consumption is doubling from generation to generation, the CS-3 doubles performance while staying within the same power envelope.

The CS-3 also offers superior ease of use: it requires 97% less code than GPUs for large language models (LLMs) and can train models ranging from 1B to 24T parameters in purely data-parallel mode.

A standard implementation of a GPT-3-sized model required just 565 lines of code on Cerebras, an industry record.
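To put the two figures side by side, here is a minimal back-of-the-envelope sketch. It assumes, which the announcement does not state explicitly, that the 97% reduction and the 565-line count describe the same GPT-3-sized comparison. The function name `implied_baseline_lines` is purely illustrative.

```python
def implied_baseline_lines(reduced_lines: float, reduction: float) -> float:
    """Return the baseline line count implied by a fractional code reduction.

    Assumption (not confirmed by the source): reduced_lines is what remains
    after cutting `reduction` (e.g. 0.97 for 97%) from the baseline.
    """
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in the range [0, 1)")
    # reduced = baseline * (1 - reduction)  =>  baseline = reduced / (1 - reduction)
    return reduced_lines / (1 - reduction)


if __name__ == "__main__":
    baseline = implied_baseline_lines(565, 0.97)
    print(f"Implied GPU implementation size: about {baseline:,.0f} lines")
```

Under that assumption, the comparable GPU implementation would run to roughly 18,800 lines of code.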

Industry Partnerships and Customer Momentum

Cerebras already has a sizeable backlog of orders for CS-3 across enterprise, government and international clouds.

“As a long-time partner of Cerebras, we are interested to see what’s possible with the evolution of wafer-scale engineering. CS-3 and the supercomputers based on this architecture are powering novel scale systems that allow us to explore the limits of frontier AI and science,” said Rick Stevens, Argonne National Laboratory Associate Laboratory Director for Computing, Environment and Life Sciences. “Cerebras’ audacity continues to pave the way for the future of AI.”


“As part of our multi-year strategic collaboration with Cerebras to develop AI models that improve patient outcomes and diagnoses, we are excited to see advancements being made on the technology capabilities to enhance our efforts,” said Dr. Matthew Callstrom, M.D., Mayo Clinic’s medical director for strategy and chair of radiology.

The CS-3 will also play an important role in the pioneering strategic partnership between Cerebras and G42. The Cerebras and G42 partnership has already delivered 8 exaFLOPs of AI supercomputer performance via Condor Galaxy 1 (CG-1) and Condor Galaxy 2 (CG-2).

Both CG-1 and CG-2, deployed in California, are among the largest AI supercomputers in the world.

About Cerebras Systems

Cerebras Systems is a team of pioneering computer architects, computer scientists, deep learning researchers, and engineers of all types. They have come together to accelerate generative AI by building a new class of AI supercomputer from the ground up. Their flagship product, the CS-3 system, is powered by the world’s largest and fastest AI processor, the Wafer-Scale Engine-3. CS-3s are quick and easy to cluster into large AI supercomputers that power the development of pathbreaking proprietary models and train open-source models with millions of downloads. For further information, visit https://www.cerebras.net.

(This article was adapted from a press release.)